Google-Landmarks Dataset

Description

The Google-Landmarks dataset contains a large number of images of places around the world.

Access via: google-landmarks-dataset

ID:(13823, 0)

Importing the libraries needed to work with DELF

Description

Import the libraries needed to work with DELF:

    from absl import logging

    import matplotlib.pyplot as plt
    import numpy as np
    from PIL import Image, ImageOps
    from scipy.spatial import cKDTree
    from skimage.feature import plot_matches
    from skimage.measure import ransac
    from skimage.transform import AffineTransform
    from six import BytesIO

    import tensorflow as tf
    import tensorflow_hub as hub
    from six.moves.urllib.request import urlopen

ID:(13814, 0)

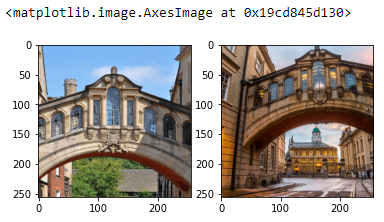

Defining the images of places

Description

Define the images of places:

    #@title Choose images
    images = 'Bridge of Sighs'  #@param ['Bridge of Sighs', 'Golden Gate', 'Acropolis', 'Eiffel tower']
    if images == 'Bridge of Sighs':
        # from: https://commons.wikimedia.org/wiki/File:Bridge_of_Sighs,_Oxford.jpg
        # by: N.H. Fischer
        IMAGE_1_URL = 'https://upload.wikimedia.org/wikipedia/commons/2/28/Bridge_of_Sighs%2C_Oxford.jpg'
        # from https://commons.wikimedia.org/wiki/File:The_Bridge_of_Sighs_and_Sheldonian_Theatre,_Oxford.jpg
        # by: Matthew Hoser
        IMAGE_2_URL = 'https://upload.wikimedia.org/wikipedia/commons/c/c3/The_Bridge_of_Sighs_and_Sheldonian_Theatre%2C_Oxford.jpg'
    elif images == 'Golden Gate':
        IMAGE_1_URL = 'https://upload.wikimedia.org/wikipedia/commons/1/1e/Golden_gate2.jpg'
        IMAGE_2_URL = 'https://upload.wikimedia.org/wikipedia/commons/3/3e/GoldenGateBridge.jpg'
    elif images == 'Acropolis':
        IMAGE_1_URL = 'https://upload.wikimedia.org/wikipedia/commons/c/ce/2006_01_21_Ath%C3%A8nes_Parth%C3%A9non.JPG'
        IMAGE_2_URL = 'https://upload.wikimedia.org/wikipedia/commons/5/5c/ACROPOLIS_1969_-_panoramio_-_jean_melis.jpg'
    else:
        IMAGE_1_URL = 'https://upload.wikimedia.org/wikipedia/commons/d/d8/Eiffel_Tower%2C_November_15%2C_2011.jpg'
        IMAGE_2_URL = 'https://upload.wikimedia.org/wikipedia/commons/a/a8/Eiffel_Tower_from_immediately_beside_it%2C_Paris_May_2008.jpg'

ID:(13815, 0)

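Image download and save function

Description

Function that downloads an image, stores it locally, and resizes it:

    def download_and_resize(name, url, new_width=256, new_height=256):
        # Download the file; Keras caches it locally under its original file name.
        path = tf.keras.utils.get_file(url.split('/')[-1], url)
        image = Image.open(path)
        # Crop and resize to the target size. Image.LANCZOS is the same filter as
        # the older Image.ANTIALIAS alias, which was removed in Pillow 10.
        image = ImageOps.fit(image, (new_width, new_height), Image.LANCZOS)
        return image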

ID:(13816, 0)

Downloading the images

Description

Download the images and display them side by side:

    image1 = download_and_resize('image_1.jpg', IMAGE_1_URL)
    image2 = download_and_resize('image_2.jpg', IMAGE_2_URL)

    plt.subplot(1, 2, 1)
    plt.imshow(image1)
    plt.subplot(1, 2, 2)
    plt.imshow(image2)

ID:(13817, 0)

Connecting to DELF

Description

Connect to DELF by loading the module from TensorFlow Hub:

    delf = hub.load('https://tfhub.dev/google/delf/1').signatures['default']

ID:(13818, 0)

Defining the DELF query function

Description

Define the DELF query function:

    def run_delf(image):
        # Convert the PIL image to a float32 tensor with values in [0, 1].
        np_image = np.array(image)
        float_image = tf.image.convert_image_dtype(np_image, tf.float32)

        return delf(
            image=float_image,
            score_threshold=tf.constant(100.0),
            image_scales=tf.constant([0.25, 0.3536, 0.5, 0.7071, 1.0, 1.4142, 2.0]),
            max_feature_num=tf.constant(1000))
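
The `image_scales` values passed to the module look like successive powers of the square root of 2, from 2^-2 up to 2^1, rounded to four decimals. The following is a minimal sketch of how such a list could be regenerated; reading the values as a √2 pyramid is an observation about the numbers, not something the source states, and the name `scales` is chosen here for illustration.

```python
# Regenerate the scale list as powers of sqrt(2): 2**(k/2) for k = -4 .. 2.
# Assumption: the hard-coded values are meant as a sqrt(2) pyramid.
scales = [round(2 ** (k / 2), 4) for k in range(-4, 3)]
print(scales)
```

Writing the list this way makes it easy to extend or shorten the pyramid without retyping the constants.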

ID:(13819, 0)

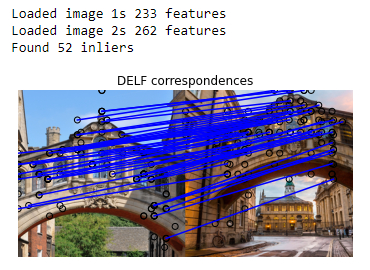

Running DELF

Description

Run DELF on both images:

    result1 = run_delf(image1)
    result2 = run_delf(image2)

ID:(13820, 0)

Defining a function to match features

Description

Define a function to match features:

    #@title TensorFlow is not needed for this post-processing and visualization
    def match_images(image1, image2, result1, result2):
        distance_threshold = 0.8

        # Read features.
        num_features_1 = result1['locations'].shape[0]
        print("Loaded image 1's %d features" % num_features_1)

        num_features_2 = result2['locations'].shape[0]
        print("Loaded image 2's %d features" % num_features_2)

        # Find nearest-neighbor matches using a KD tree.
        d1_tree = cKDTree(result1['descriptors'])
        _, indices = d1_tree.query(
            result2['descriptors'],
            distance_upper_bound=distance_threshold)

        # Select feature locations for putative matches. An index equal to
        # num_features_1 means no neighbor was found within the threshold.
        locations_2_to_use = np.array([
            result2['locations'][i,]
            for i in range(num_features_2)
            if indices[i] != num_features_1
        ])
        locations_1_to_use = np.array([
            result1['locations'][indices[i],]
            for i in range(num_features_2)
            if indices[i] != num_features_1
        ])

        # Perform geometric verification using RANSAC.
        _, inliers = ransac(
            (locations_1_to_use, locations_2_to_use),
            AffineTransform,
            min_samples=3,
            residual_threshold=20,
            max_trials=1000)

        print('Found %d inliers' % sum(inliers))

        # Visualize correspondences.
        _, ax = plt.subplots()
        inlier_idxs = np.nonzero(inliers)[0]
        plot_matches(
            ax,
            image1,
            image2,
            locations_1_to_use,
            locations_2_to_use,
            np.column_stack((inlier_idxs, inlier_idxs)),
            matches_color='b')
        ax.axis('off')
        ax.set_title('DELF correspondences')
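
The filter `indices[i] != num_features_1` in the function relies on how `cKDTree.query` reports missing neighbors: when no point lies within `distance_upper_bound`, the returned index equals the number of points in the tree and the distance is infinite. The sketch below shows this on toy data; the two-dimensional descriptor values are made up for illustration and are not real DELF output.

```python
import numpy as np
from scipy.spatial import cKDTree

# Toy descriptors (hypothetical values, not real DELF descriptors).
descriptors_1 = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
descriptors_2 = np.array([[0.1, 0.0], [5.0, 5.0]])

tree = cKDTree(descriptors_1)
distances, indices = tree.query(descriptors_2, distance_upper_bound=0.8)

# A query without a neighbor inside the bound gets index == tree.n
# (here 3) and an infinite distance, so it can be filtered out.
matched = indices != tree.n
print(indices, distances, matched)
```

Only the first query point has a neighbor closer than 0.8, so only it would be kept as a putative match.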

ID:(13821, 0)

Displaying the matches between features

Description

Display the matches between features:

    match_images(image1, image2, result1, result2)

ID:(13822, 0)

Loading the structure for the query

Description

Load the structure for the query:

    # Note: this block uses the TensorFlow 1.x graph API. Under TensorFlow 2,
    # reset_default_graph, logging and placeholder live in tf.compat.v1.
    tf.reset_default_graph()
    tf.logging.set_verbosity(tf.logging.FATAL)

    m = hub.Module('https://tfhub.dev/google/delf/1')

    # The module operates on a single image at a time, so define a placeholder to
    # feed an arbitrary image in.
    image_placeholder = tf.placeholder(
        tf.float32, shape=(None, None, 3), name='input_image')

    module_inputs = {
        'image': image_placeholder,
        'score_threshold': 100.0,
        'image_scales': [0.25, 0.3536, 0.5, 0.7071, 1.0, 1.4142, 2.0],
        'max_feature_num': 1000,
    }

    module_outputs = m(module_inputs, as_dict=True)

    # image_input_fn and db_images are not defined in this section; they are
    # assumed to be provided elsewhere.
    image_tf = image_input_fn(db_images)

ID:(13824, 0)

Importing the images for analysis

Description

Import the images for analysis, extract their features, and build the index:

    from itertools import accumulate  # needed for the index boundaries below

    with tf.train.MonitoredSession() as sess:
        results_dict = {}  # Stores the locations and their descriptors for each image
        for image_path in db_images:
            image = sess.run(image_tf)
            print('Extracting locations and descriptors from %s' % image_path)
            results_dict[image_path] = sess.run(
                [module_outputs['locations'], module_outputs['descriptors']],
                feed_dict={image_placeholder: image})

    # Stack the features of all images into single arrays.
    locations_agg = np.concatenate([results_dict[img][0] for img in db_images])
    descriptors_agg = np.concatenate([results_dict[img][1] for img in db_images])
    # Running totals of feature counts: entry k is the end row of image k.
    accumulated_indexes_boundaries = list(accumulate([results_dict[img][0].shape[0] for img in db_images]))

    d_tree = cKDTree(descriptors_agg)  # build the KD tree
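
Because all descriptors are stacked into one array, a row returned by the KD tree has to be mapped back to the image it came from, and `accumulated_indexes_boundaries` holds the information needed for that. The following is a minimal sketch of one way to do the lookup with `bisect`; the helper `image_index_for` and the feature counts are hypothetical and are not taken from the source.

```python
from bisect import bisect_right
from itertools import accumulate

# Hypothetical feature counts for three database images.
features_per_image = [4, 2, 3]
boundaries = list(accumulate(features_per_image))  # running totals

def image_index_for(aggregated_index, boundaries):
    """Return the position of the image that owns a row of the stacked array."""
    # Rows 0..boundaries[0]-1 belong to image 0, the next block to image 1, etc.
    return bisect_right(boundaries, aggregated_index)

owners = [image_index_for(i, boundaries) for i in range(boundaries[-1])]
print(boundaries, owners)
```

A binary search keeps the lookup cheap even when the database holds many images.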

ID:(13825, 0)

Determining the location

Description

Determine the location:

    import matplotlib.image as mpimg  # needed for mpimg.imread below

    # resized_image, unique_image_indexes, indices and get_locations_2_use are
    # not defined in this section; they are assumed to come from earlier steps.

    # Array to keep track of all candidates in database.
    inliers_counts = []
    # Read the resized query image for plotting.
    img_1 = mpimg.imread(resized_image)

    for index in unique_image_indexes:
        locations_2_use_query, locations_2_use_db = get_locations_2_use(index, indices, accumulated_indexes_boundaries)

        # Perform geometric verification using RANSAC.
        _, inliers = ransac(
            (locations_2_use_db, locations_2_use_query),  # source and destination coordinates
            AffineTransform,
            min_samples=3,
            residual_threshold=20,
            max_trials=1000)

        # If no inlier is found for a database candidate image, we continue on to the next one.
        if inliers is None or len(inliers) == 0:
            continue

        # Use the number of inliers as the score for retrieved images.
        inliers_counts.append({'index': index, 'inliers': sum(inliers)})
        print('Found inliers for image {} -> {}'.format(index, sum(inliers)))

        # Visualize correspondences.
        _, ax = plt.subplots()
        img_2 = mpimg.imread(db_images[index])
        inlier_idxs = np.nonzero(inliers)[0]
        plot_matches(
            ax,
            img_1,
            img_2,
            locations_2_use_db,
            locations_2_use_query,
            np.column_stack((inlier_idxs, inlier_idxs)),
            matches_color='b')
        ax.axis('off')
        ax.set_title('DELF correspondences')
        plt.show()
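
The loop only collects an inlier count per candidate; to name a location, the candidates still have to be ranked. The sketch below shows one plausible way to do that, assuming the candidate with the most inliers is taken as the best match. The sample scores are invented for illustration.

```python
# Hypothetical scores in the same shape as the entries appended in the loop.
inliers_counts = [
    {'index': 0, 'inliers': 12},
    {'index': 3, 'inliers': 47},
    {'index': 5, 'inliers': 8},
]

# Rank candidates by inlier count, highest first.
ranked = sorted(inliers_counts, key=lambda c: c['inliers'], reverse=True)
# Guard against the case where no candidate survived verification.
best = ranked[0] if ranked else None
print(best)
```

In the retrieval setting, `best['index']` would then be used to look up the matching entry in `db_images`.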

ID:(13827, 0)